Item Fit – Statistical and Practical Significance of Item Misfit in Educational Testing

In the context of large-scale educational assessments, this project tests items that show model misfit for both statistical and practical significance.

Project Description

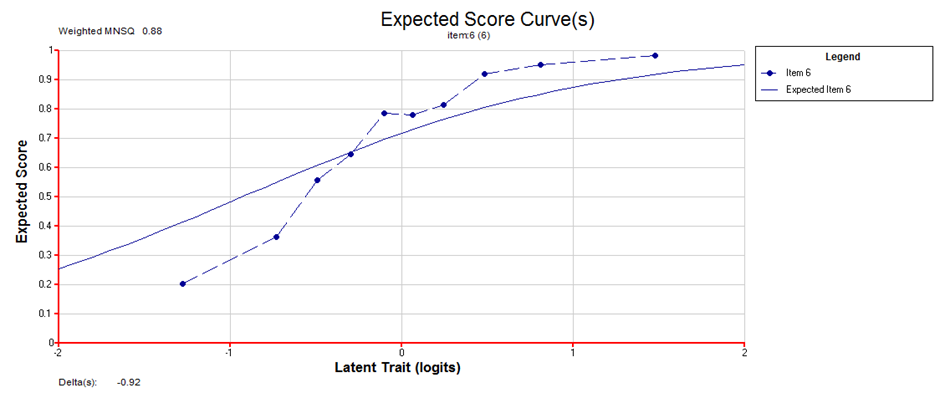

Statistical analyses of large-scale educational assessments are generally based on measurement models from Item Response Theory (IRT). Valid conclusions can only be drawn if the observed data fit the IRT measurement model. Evaluating model fit therefore includes, among other things, testing item fit, that is, testing whether and how well the observed responses to an item agree with the responses expected under the model (see figure). However, many different fit statistics exist, and studies apply different cut-off values, both for the same fit statistic and across different statistics. Consequently, there is no clear picture of when an item should be excluded and when it should be retained as fitting. Beyond statistical significance, it is also important to examine the practical significance of item misfit, that is, how strongly a misfit actually affects the results of an assessment.
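The comparison of observed and expected responses can be illustrated with two widely used residual-based statistics, the outfit and infit mean squares. The sketch below is an illustration under stated assumptions, not the statistics or criteria recommended by the project: it uses the dichotomous Rasch model, treats person abilities and the item difficulty as known, and applies hypothetical cut-offs of 0.8 and 1.2. The function names `rasch_prob`, `item_fit` and `flag_misfit` are likewise hypothetical.

```python
import math
from typing import Sequence, Tuple


def rasch_prob(theta: float, b: float) -> float:
    """P(X = 1 | theta, b) under the dichotomous Rasch model."""
    return 1.0 / (1.0 + math.exp(-(theta - b)))


def item_fit(responses: Sequence[int], thetas: Sequence[float], b: float) -> Tuple[float, float]:
    """Return (outfit, infit) mean-square statistics for a single item.

    Simplification: abilities `thetas` and difficulty `b` are treated as
    known; in a real analysis both are estimated from the data.
    """
    sq_resid = []   # squared residuals (x - p)^2, one per person
    variances = []  # model variances p * (1 - p), one per person
    for x, theta in zip(responses, thetas):
        p = rasch_prob(theta, b)
        sq_resid.append((x - p) ** 2)
        variances.append(p * (1.0 - p))
    # Outfit: unweighted mean of squared standardized residuals
    outfit = sum(r / v for r, v in zip(sq_resid, variances)) / len(sq_resid)
    # Infit: squared residuals weighted by the model variance (information)
    infit = sum(sq_resid) / sum(variances)
    return outfit, infit


# Hypothetical cut-offs for illustration only; which values to use is
# exactly the open question addressed by the project.
LOWER, UPPER = 0.8, 1.2


def flag_misfit(outfit: float, infit: float) -> bool:
    """True if either mean square falls outside [LOWER, UPPER]."""
    return not (LOWER <= outfit <= UPPER and LOWER <= infit <= UPPER)
```

With `thetas = [-2.0, -1.0, 0.0, 1.0, 2.0]` and `b = 0.0`, the model-consistent pattern `[0, 0, 1, 1, 1]` yields mean squares below 1 (responses more deterministic than the model expects), whereas the reversed pattern `[1, 1, 0, 0, 0]` yields values far above 1.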

Project Objectives

Against this background, the project pursues two objectives:

- Derivation of guidelines for large-scale educational assessments that provide recommendations on item fit statistics and the corresponding cut-off criteria

- Development of approaches and methods, in the areas of correlation analyses and comparisons of competencies over time, for determining the practical significance of item misfit.

To achieve these objectives, the project first draws on simulation studies, which allow individual factors to be varied systematically and their impact on fit measures to be analysed separately. In addition, effect sizes will be determined for item misfit; these are closely linked to the magnitude of the model violation.
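A minimal simulation in this spirit might look as follows. It assumes, purely for illustration, that the manipulated factor is the slope (discrimination) of an item's true response curve, so that any slope other than 1 violates the Rasch model, and that the outfit mean square serves as the fit measure. The project's actual simulation factors and statistics are not specified here, `simulate_outfit` and `run_design` are hypothetical names, and true abilities are used in place of estimates.

```python
import math
import random
from typing import Dict, Sequence


def simulate_outfit(discrimination: float, n_persons: int = 5000,
                    b: float = 0.0, seed: int = 1) -> float:
    """Outfit of one item whose true response curve has the given slope.

    Data are generated with slope `discrimination`, but fit is evaluated
    against the Rasch model (slope 1), so any slope != 1 is a violation.
    True abilities are used instead of estimates to keep the sketch short.
    """
    rng = random.Random(seed)  # same seed -> same persons in every condition
    total = 0.0
    for _ in range(n_persons):
        theta = rng.gauss(0.0, 1.0)  # ability drawn from N(0, 1)
        u = rng.random()             # uniform draw that decides the response
        p_true = 1.0 / (1.0 + math.exp(-discrimination * (theta - b)))
        x = 1 if u < p_true else 0
        p_rasch = 1.0 / (1.0 + math.exp(-(theta - b)))
        # Squared standardized residual under the Rasch expectation
        total += (x - p_rasch) ** 2 / (p_rasch * (1.0 - p_rasch))
    return total / n_persons


def run_design(discriminations: Sequence[float]) -> Dict[float, float]:
    """Vary one factor (size of the slope violation), keep all else fixed."""
    return {a: simulate_outfit(a) for a in discriminations}


results = run_design([1.0, 0.7, 0.4])
```

Because every condition reuses the same seeded abilities and uniform draws, lowering the slope can only turn model-consistent responses into inconsistent ones, so the outfit cannot decrease as the violation grows. This mirrors the idea that an effect size for misfit should track the magnitude of the model violation.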

Regarding the second objective, empirical data will be used to validate the findings on methods for assessing the practical significance of item misfit. Current research relies mostly on main survey data, for which the items have already been pre-selected. To investigate the practical significance of item misfit, this project therefore also includes field test data. In cooperation with the Centre for International Student Assessment (ZIB), field test data from PISA 2018 will be used to discuss criteria for assessing the significance of item misfit and to apply these criteria to specific questions. One example is evaluating how large the differences in the distribution of competencies (the estimated person parameters) are when statistically misfitting items are included versus excluded.
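The comparison of competence distributions with and without misfitting items can be sketched in a deliberately simplified form. This is an illustration, not the criteria developed in the project: it assumes a Rasch model with known item difficulties, expected a posteriori (EAP) ability estimates under a standard normal prior on a fixed grid, and a standardized mean difference as one possible effect size. The names `eap` and `compare_distributions` are hypothetical, and operational PISA scaling is considerably more elaborate.

```python
import math
from statistics import mean, stdev
from typing import Dict, Sequence, Set

# Quadrature grid from -4 to 4 with an unnormalized N(0, 1) prior
# (hypothetical choices; the normalizing constant cancels in the ratio).
GRID = [(k - 40) / 10 for k in range(81)]
PRIOR = [math.exp(-0.5 * t * t) for t in GRID]


def eap(responses: Sequence[int], difficulties: Sequence[float]) -> float:
    """Expected a posteriori (EAP) ability estimate under the Rasch model."""
    num = den = 0.0
    for t, w in zip(GRID, PRIOR):
        weight = w  # prior times likelihood at grid point t
        for x, b in zip(responses, difficulties):
            p = 1.0 / (1.0 + math.exp(-(t - b)))
            weight *= p if x == 1 else 1.0 - p
        num += t * weight
        den += weight
    return num / den


def compare_distributions(data: Sequence[Sequence[int]],
                          difficulties: Sequence[float],
                          drop: Set[int]) -> Dict[str, float]:
    """Summarize EAP estimates with all items and without the items in `drop`."""
    keep = [j for j in range(len(difficulties)) if j not in drop]
    full = [eap(row, difficulties) for row in data]
    reduced = [eap([row[j] for j in keep], [difficulties[j] for j in keep])
               for row in data]
    pooled_sd = math.sqrt((stdev(full) ** 2 + stdev(reduced) ** 2) / 2)
    return {
        "mean_full": mean(full), "sd_full": stdev(full),
        "mean_reduced": mean(reduced), "sd_reduced": stdev(reduced),
        # Standardized mean difference as one possible effect size
        "smd": (mean(full) - mean(reduced)) / pooled_sd,
    }
```

Here `drop` holds the column indices of the items flagged as misfitting; passing an empty set reproduces the full-test estimates and therefore a difference of zero.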

Funding

The project is funded by the German Research Foundation (Deutsche Forschungsgemeinschaft, DFG) (KO 5637/1-1).

Cooperations

Dr. Alexander Robitzsch (Leibniz Institute for Science and Mathematics Education [IPN], Kiel)

Prof. Dr. Matthias von Davier (Lynch School of Education and Human Development, Chestnut Hill, MA, USA)

Dr. Jörg-Henrik Heine (Zentrum für internationale Bildungsvergleichsstudien [ZIB], Munich)

Project Management

Project Details

| Status: | Completed |
|---|---|
| Department: | Teacher and Teaching Quality |
| Duration: | 04/2018 – 07/2021 |
| Funding: | External funding |